Creating the animations

The animated head is based on real articulatory movements. Animations were created in Autodesk Maya using a 2-D head rig that allows control of the jaw, tongue, lips, larynx, soft palate and uvula. The head rig was based on a high-resolution scan of a midsagittal slice of the model talker’s head (see Figure 1). Teeth are not visible in MRI, because their hard tissue contains very little free water and so produces almost no signal. The positions of the upper and lower incisors were therefore reconstructed in two ways: by examining interdental articulations, and by identifying places where the biting edges of the incisors indented the tongue surface.

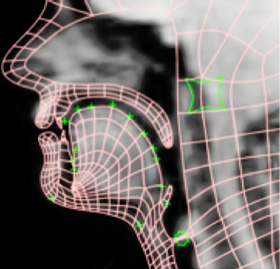

The animation rig was superimposed on MRI videos of the midsagittal section during speech and was manipulated to follow the movements of the vocal articulators (see Figure 2). The MRI videos had a low frame rate of 6.5 frames per second (fps). The animator therefore filled in the missing intermediate frames, using matching ultrasound tongue imaging and lip-camera videos as reference. The result was animations at 24 fps.
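
Going from 6.5 fps to 24 fps means about 3.7 output frames for every interval between MRI frames. Most output frames therefore have no MRI frame of their own. The text says the animator filled these frames by hand, guided by ultrasound and lip-camera video. The sketch below is only a naive baseline for comparison, not the method used. It linearly interpolates 2-D control-point positions between MRI keyframes. The function name and the data layout (a list of frames, each a list of `(x, y)` points) are illustrative assumptions.

```python
def resample_frames(frames, src_fps, dst_fps):
    """Hypothetical helper: linearly resample control-point tracks.

    frames[f][p] is the (x, y) position of control point p in source frame f.
    Returns frames at dst_fps spanning the same duration as the input.
    """
    if len(frames) < 2:
        raise ValueError("need at least two source frames")
    n_src = len(frames)
    duration = (n_src - 1) / src_fps              # seconds covered by the keyframes
    n_dst = int(duration * dst_fps + 1e-9) + 1    # small epsilon guards float rounding
    out = []
    for k in range(n_dst):
        s = (k / dst_fps) * src_fps               # output time in source-frame units
        i = min(int(s), n_src - 2)                # source frame at or before s
        u = s - i                                 # fraction of the way to frame i + 1
        a, b = frames[i], frames[i + 1]
        out.append([(ax + (bx - ax) * u, ay + (by - ay) * u)
                    for (ax, ay), (bx, by) in zip(a, b)])
    return out
```

For example, 14 MRI frames (2 s at 6.5 fps) yield 49 frames at 24 fps. Linear interpolation cannot recover articulatory movements that happen between MRI frames. This is why the supplementary ultrasound and lip-camera video matter.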

Figure 1: Midsagittal MRI frame of the model talker’s head

Figure 2: Animation rig superimposed on the MRI frame

The tongue rig has 13 control points, distributed as follows: epiglottis (2), tongue root (3), dorsum (2), front (1), blade (1), tip (1) and sublaminal region (3) (see Figure 3). The neck and larynx have controls that permit two-dimensional movement. The jaw has a control that permits both translation and rotation, in order to capture its hinge-like movements.
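
The rig described above can be summarised in a small sketch. It records the per-region tongue control counts from the text, which sum to 13. It also models the jaw control as a 2-D rigid transform: a rotation about a hinge pivot (the hinge-like opening) followed by a translation. This is plain geometry, not Maya's API. The pivot location, the counterclockwise-positive angle convention and the rotate-then-translate order are all assumptions.

```python
import math

# Tongue control-point counts per region, as listed in the text.
TONGUE_CONTROLS = {
    "epiglottis": 2,
    "tongue root": 3,
    "dorsum": 2,
    "front": 1,
    "blade": 1,
    "tip": 1,
    "sublaminal": 3,
}

def jaw_transform(points, pivot, angle_deg, dx, dy):
    """Hypothetical jaw control: rotate 2-D points about a hinge pivot, then translate.

    A positive angle rotates counterclockwise (an assumed convention).
    (dx, dy) is the extra translation applied after the rotation.
    """
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    px, py = pivot
    out = []
    for x, y in points:
        rx, ry = x - px, y - py          # coordinates relative to the pivot
        out.append((px + c * rx - s * ry + dx,
                    py + s * rx + c * ry + dy))
    return out
```

Combining rotation with translation matters because a pure hinge rotation cannot reproduce a jaw that also slides while it opens.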

The lips and velum were animated using blendshape deformers, which allowed the animator to morph the shape of the lips and velum between target shapes. For the velum and uvula, for example, this technique allows morphing between two positions: the lowered position that is normal during breathing, and the raised position that closes off the nasal cavity, as required for non-nasal speech sounds.
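
A blendshape adds a weighted offset toward each target shape to a base shape: result = base + Σ wᵢ (targetᵢ − base). This is the general idea behind deformers such as Maya's blendShape. The sketch below is not Maya's API. It applies the formula to a 2-D outline. The velum vertex coordinates in the usage example are made up for illustration.

```python
def blend(base, targets, weights):
    """Blendshape-style morph of a 2-D outline.

    base: list of (x, y) vertices of the base shape.
    targets: dict name -> list of (x, y) vertices (same vertex count as base).
    weights: dict name -> weight (0 gives the base, 1 gives the full target).
    Each target contributes weight * (target - base) to every vertex.
    """
    out = []
    for i, (bx, by) in enumerate(base):
        x, y = bx, by
        for name, w in weights.items():
            tx, ty = targets[name][i]
            x += w * (tx - bx)
            y += w * (ty - by)
        out.append((x, y))
    return out

# Hypothetical velum outlines: lowered (breathing) as base, raised as target.
velum_lowered = [(0.0, 0.0), (1.0, -1.0)]
velum_raised = [(0.0, 0.0), (1.0, 1.0)]
halfway = blend(velum_lowered, {"raised": velum_raised}, {"raised": 0.5})
```

Animating the single weight from 0 to 1 moves the velum from the breathing position to closure. This is simpler than keying each vertex separately.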

Figure 3: Animation rig, showing control points

Figure 4: Final black-and-white animation