Recording MRI

The MRI recordings on this website were made at Edinburgh University’s Edinburgh Imaging Facility QMRI, at the Edinburgh Bioquarter site, with subjects lying prone in the dedicated 12-channel matrix coil of a 3 tesla large-bore Siemens Verio MRI system. Recording protocols were developed by MRI physicists Dr Gillian MacNaught and Dr Scott Semple. MRI data were acquired by Gillian MacNaught and the clinical recording team at CRIC. Audio recordings were made by senior experimental officer Steve Cowen (CASL research centre, Queen Margaret University), and the MRI videos with synchronised audio were created by Dr Satsuki Nakai.

The recording protocol produced approximately 6.5 mid-sagittal head scans per second. The scans were saved in the DICOM medical image format and converted into AVI video using ImageJ (Rasband 1997-2014); the audio was then added to each video using ffmpeg.
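At roughly 6.5 scans per second, each MRI frame spans about 154 ms, so a single frame can cover most of a short consonant. The sketch below, which is illustrative rather than the team's actual script, shows that arithmetic and builds one plausible ffmpeg command for adding an audio track to a silent AVI. The file names and the choice of 16-bit PCM audio are assumptions; the ffmpeg options used (`-map`, `-c:v copy`, `-c:a pcm_s16le`, `-shortest`) are standard.

```python
import math

FRAME_RATE = 6.5  # approximate scans per second, as stated for the protocol


def frame_duration(fps: float = FRAME_RATE) -> float:
    """Seconds covered by one MRI frame."""
    return 1.0 / fps


def frame_index(t_seconds: float, fps: float = FRAME_RATE) -> int:
    """Index of the MRI frame on screen at audio time t_seconds.

    Frame k covers the half-open interval [k / fps, (k + 1) / fps).
    """
    return math.floor(t_seconds * fps)


def mux_command(video: str, audio: str, out: str) -> list[str]:
    """Build an ffmpeg argument list that adds an audio track to a silent video.

    The video stream is copied unchanged and the audio is stored as 16-bit PCM,
    which the AVI container supports. All file names are hypothetical.
    """
    return [
        "ffmpeg",
        "-i", video,          # input 0: video converted from the DICOM frames
        "-i", audio,          # input 1: the audio to synchronise with it
        "-map", "0:v:0",      # take the video stream from input 0
        "-map", "1:a:0",      # take the audio stream from input 1
        "-c:v", "copy",       # do not re-encode the video frames
        "-c:a", "pcm_s16le",  # uncompressed 16-bit audio
        "-shortest",          # stop at the end of the shorter input
        out,
    ]
```

The command could be run with `subprocess.run(mux_command("scan.avi", "speech.wav", "scan_with_audio.avi"), check=True)`. Copying the video stream avoids re-encoding the frames that ImageJ exported, so the image quality and frame timing are left untouched.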

Stimuli

Stimuli were International Phonetic Alphabet (IPA) symbols, presented in isolation (where appropriate) and embedded in nonwords. They were arranged as a PowerPoint presentation and shown to the participant through fibre-optic video goggles.
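The structure of the stimulus set can be sketched as follows, using the contexts shown for [tʼ] in Figure 2: the symbol on its own, then the symbol between identical vowels [ə], [ɑ] and [i]. Treating these three vowel frames as the frames used for every symbol is an assumption made for illustration only, and the function name is hypothetical.

```python
# Vowel frames taken from the Figure 2 example ([ətʼə], [ɑtʼɑ], [itʼi]);
# assuming every symbol used exactly these frames is hypothetical.
VOWEL_FRAMES = ("ə", "ɑ", "i")


def stimulus_items(symbol: str, in_isolation: bool = True) -> list[str]:
    """Return the prompts for one IPA symbol.

    If in_isolation is True (i.e. isolation is appropriate for this sound),
    the bare symbol comes first; it is followed by one VCV nonword per
    vowel frame, with the symbol placed between two identical vowels.
    """
    items = [symbol] if in_isolation else []
    items += [f"{v}{symbol}{v}" for v in VOWEL_FRAMES]
    return items
```

For example, `stimulus_items("tʼ")` yields the four items named in the Figure 2 caption, while `in_isolation=False` covers sounds for which a bare symbol was not appropriate.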

Recording audio and resynchronising audio and video

We used an OptoAcoustics FOMRI™ III dual-channel fibre-optic microphone system (see Figure 1 below) to record speech inside the MRI machine. The microphone contains no metal parts and is therefore safe to use in an MRI machine; the microphone and its associated software also provide built-in noise cancellation, which reduces the noise generated by the MRI machine in the acoustic signal. Sound is captured by two microphones: one points towards the speaker’s mouth and is covered by a pop-shield, while the other points away from the speaker to pick up the ambient noise inside the MRI machine. The OptiMRI software uses the recording from the upper microphone to help remove noise produced by the MRI machine. The microphone was fixed to the head coil with Velcro and positioned as close as possible to the speaker’s lips using its gooseneck. A bite plate recording could not be obtained because the head coil was too close to the speaker’s face.

Figure 1: Fibre-optic, noise-cancelling microphone used to record speech inside the MRI machine

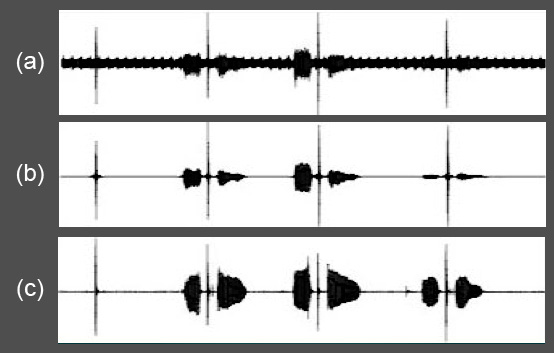
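The two-microphone arrangement described above is the classic setup for adaptive noise cancellation: one channel carries speech plus noise, the other mostly noise, and a filter learns how the reference noise shows up in the speech channel so it can be subtracted. The OptiMRI algorithm is proprietary and is not reproduced here; the sketch below swaps in a textbook least-mean-squares (LMS) canceller, with a synthetic sinusoid standing in for speech and white noise for scanner noise. The filter length, step size and leakage model are all assumptions chosen for the demo.

```python
import math
import random


def lms_cancel(primary, reference, n_taps=8, mu=0.01):
    """Adaptive noise cancellation with an LMS filter (generic illustration).

    primary:   samples from the microphone facing the mouth (speech + noise)
    reference: samples from the microphone facing away (mostly noise)
    Returns the error signal, which approximates the speech once the filter
    has learned how the reference noise appears in the primary channel.
    """
    weights = [0.0] * n_taps
    history = [0.0] * n_taps  # most recent reference sample first
    cleaned = []
    for p, r in zip(primary, reference):
        history = [r] + history[:-1]
        noise_estimate = sum(w * x for w, x in zip(weights, history))
        error = p - noise_estimate
        # Steepest-descent step on the squared error
        weights = [w + 2 * mu * error * x for w, x in zip(weights, history)]
        cleaned.append(error)
    return cleaned


def demo(n=4000, seed=0):
    """Synthetic check: returns residual noise power before and after LMS."""
    rng = random.Random(seed)
    speech = [math.sin(2 * math.pi * 0.01 * i) for i in range(n)]
    noise = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    # Hypothetical leakage: noise reaches the mouth microphone attenuated
    # and one sample late
    primary = [s + 0.8 * (noise[i - 1] if i > 0 else 0.0)
               for i, s in enumerate(speech)]
    cleaned = lms_cancel(primary, noise)
    tail = range(n // 2, n)  # skip the initial adaptation period
    before = sum((primary[i] - speech[i]) ** 2 for i in tail) / len(tail)
    after = sum((cleaned[i] - speech[i]) ** 2 for i in tail) / len(tail)
    return before, after
```

Running `before, after = demo()` shows the residual noise power dropping once the filter has adapted. Real scanner noise is far less cooperative than this white-noise stand-in, which is consistent with the further offline denoising described next.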

Further noise-cancelling was carried out using widely available software, e.g. Audacity (Audacity Project 2005) and Praat (Boersma & Weenink 2013); however, it was not possible to remove the extraneous noise from the MRI machine completely without also removing too much of the speech signal. For the purposes of the Seeing Speech resource, that is, to exemplify the sounds on the IPA chart, we opted for “clean” audio that matched the MRI videos. We obtained it by using Praat (Boersma & Weenink 2013) to resynthesise (that is, temporally modify) speech samples produced by the same speaker during an ultrasound tongue imaging (UTI) recording session (see Figure 2). To make the resynthesised samples match the original MRI-session recording as closely as possible, the denoised audio from the MRI session was played to the speaker as an audio stimulus, together with the visual prompt, during the UTI recording. We also examined the lingual articulation in the MRI and UTI recordings to make sure that the two were comparable.
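Temporal modification of this kind can be described as a piecewise-linear time map: matching landmarks (for instance segment boundaries) are marked in the UTI-session audio and in the MRI-session audio, and each stretch between landmarks is lengthened or shortened by a constant factor. The sketch below computes those factors and the resulting time map. It does not perform the resynthesis itself; in Praat that is typically done by entering relative durations into a Manipulation's DurationTier and resynthesising by overlap-add. The landmark-based approach and the function names here are assumptions, not a record of the exact procedure used.

```python
from bisect import bisect_right


def duration_factors(src_bounds, tgt_bounds):
    """Per-interval stretch factors turning source durations into target ones.

    src_bounds: landmark times (s) in the UTI-session recording, increasing
    tgt_bounds: the same landmarks (s) in the MRI-session recording
    A factor above 1 lengthens that stretch of the UTI audio.
    """
    if len(src_bounds) != len(tgt_bounds) or len(src_bounds) < 2:
        raise ValueError("need matching lists of at least two landmarks")
    return [
        (t1 - t0) / (s1 - s0)
        for s0, s1, t0, t1 in zip(src_bounds, src_bounds[1:],
                                  tgt_bounds, tgt_bounds[1:])
    ]


def warp_time(t, src_bounds, tgt_bounds):
    """Map a time in the source recording onto the target time axis
    by linear interpolation between the surrounding landmarks."""
    i = bisect_right(src_bounds, t) - 1
    i = max(0, min(i, len(src_bounds) - 2))  # clamp to first/last interval
    s0, s1 = src_bounds[i], src_bounds[i + 1]
    t0, t1 = tgt_bounds[i], tgt_bounds[i + 1]
    return t0 + (t - s0) * (t1 - t0) / (s1 - s0)
```

With hypothetical landmarks `src = [0.0, 0.1, 0.3]` and `tgt = [0.0, 0.15, 0.35]`, the first interval is stretched by a factor of 1.5 and the second is left unchanged, so a point 50 ms into the UTI token lands 75 ms into the MRI-aligned version.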

Figure 2: The waveforms of [tʼ] (the alveolar ejective) produced in isolation and in three vocalic contexts ([ətʼə], [ɑtʼɑ] and [itʼi]), from audio recordings (a) made during the MRI session, with noise-cancelling from the optical microphone; (b) made during the MRI session, with further denoising using Praat; and (c) made during the UTI session, time-aligned with the audio recording from the MRI session using Praat.

References

Audacity Project. 2005. Audacity. Version 2.0.5 (October 21, 2013). Pittsburgh: Carnegie Mellon University.

Baer, T., Gore, J.C., Boyce, S. and Nye, P.W. 1987. Application of MRI to the analysis of speech production. Magnetic Resonance Imaging 5(1), 1-7.

Boersma, P. and Weenink, D. 2013. Praat: doing phonetics by computer. Version 5.3.47. http://www.praat.org/.

Rasband, W.S. 1997-2014. ImageJ. Bethesda, Maryland, USA: U.S. National Institutes of Health.